
Almost Timely News: 🗞️ 2025 AI Year in Review (2025-12-14)

The Big Plug

🚨 Watch my latest keynote, How to Successfully Apply AI in Financial Aid, from MASFAA 2025.

Content Authenticity Statement

100% of this week’s newsletter was generated by me, the human. I did the spoken word version first, then used Google Gemini 3 to clean up the transcript. Learn why this kind of disclosure is a good idea and might be required for anyone doing business in any capacity with the EU in the near future.

Watch This Newsletter On YouTube 📺

Click here for the video 📺 version of this newsletter on YouTube »

Click here for an MP3 audio 🎧 only version »

What’s On My Mind: The 2025 AI Year in Review

Let’s do the 2025 AI wrapped—the year in review for generative AI.

Normally for the newsletter, I write it first and then basically just read it aloud. We’re going to do things a little bit differently this time. This issue is derived in part from a talk I did this past week for my friends over at Joist. I tailored that talk for them and their audience. I love talking to folks about this stuff, but I did have to pull back on some of the content. That wasn’t because anything was bad, but because a lot of what I care about, the super nerdy stuff, is just not appropriate for most audiences, who want things that will help them do their jobs better right now.

This newsletter and my platforms are basically the stuff that I do for fun, for me. So, I’m going to do my version of this talk as if I were talking to myself or someone like me who’s okay with getting lost in the weeds and getting super nerdy.

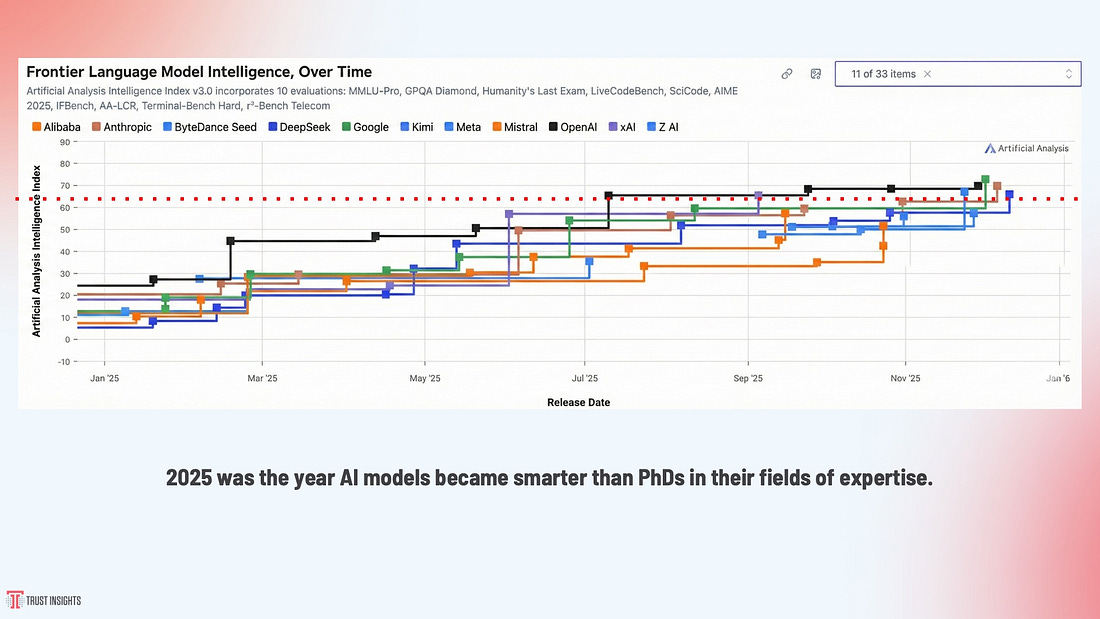

The Year of Intelligence

With that, let’s talk about the year that was. 2025 was the year that AI models became smarter than PhDs in their fields of expertise on several benchmarks, not just one. This chart here is from Artificial Analysis, which is one of my personal favorite sites for keeping an eye on what’s going on with AI models. Artificial Analysis does a nice job of gathering data and presenting it in visualizations. This shows frontier language models’ intelligence over time.

What you see is that at the beginning of 2025, most models were scoring anywhere from 5 to 25 points on this hybrid index. Most of the tests used to evaluate AI models are multiple-choice. You can generally expect a human being outside their field of expertise to score around 20 or 25, basically no better than random guessing. If you and I were to take one of these tests with four answer choices and not know the answers, we could just choose “C” for the entire session and be right about 25% of the time. That’s kind of where AI started the year. Even the very best models, like GPT-4o or Gemini 2, still scored pretty low on this hybrid index of tests.
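For the nerds who want to see the "always pick C" baseline rather than take my word for it, here is a minimal sketch. It assumes four answer choices per question and an answer key spread evenly at random across them; the function name and the question count are made up for illustration and do not come from any real benchmark.

```python
import random


def constant_guess_score(num_questions: int, num_options: int = 4, seed: int = 0) -> float:
    """Percent correct when a test taker answers "C" on every question.

    Assumption: the correct answer for each question is drawn uniformly
    at random from the available options.
    """
    if num_options < 3:
        raise ValueError("need at least three options for 'C' to exist")
    rng = random.Random(seed)
    # Hypothetical answer key: option indices 0..num_options-1 (0=A, 1=B, 2=C, ...)
    answer_key = [rng.randrange(num_options) for _ in range(num_questions)]
    always_c = 2
    correct = sum(1 for answer in answer_key if answer == always_c)
    return 100.0 * correct / num_questions


score = constant_guess_score(100_000)
print(f"Always choosing C: {score:.1f}% correct")
```

With enough questions, the result settles near 25%, which is why a score in the low twenties on a four-option test tells you very little about actual knowledge.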

On this collection of tests, a human expert in their field would be expected to score around 65%. A PhD knows their stuff, knows the field. What you see here is that the first model to crack that ceiling was OpenAI’s GPT-5 in August of 2025. When that model came out, it was a big jump. There were other jumps throughout the year with GPT-4.1, GPT-4.5, and so on.

Shortly after that, you saw companies like ByteDance and Moonshot, the maker of Kimi, make their leaps. Then, of course, towards the end of the year, you saw Anthropic’s Claude Opus 4.5 and Google’s Gemini 3. Gemini 3 came out swinging hard. On this index, it is currently the smartest model available on the market. What’s important here is that all the foundation models are ending the year substantially smarter than they started. We’re talking about going from a face-rolling moron to a PhD inside of a year. That is just mind-melting when you think about how fast a technology can evolve. Human beings can’t do that.

If we look back in time, a test called Humanity’s Last Exam debuted in January 2025. It’s a reasoning and knowledge test that is not multiple-choice; it has freeform answers. The test is designed to be something that an expert would know but a non-expert would have a difficult time Googling. There’s one question, which we’ll see in a little bit, that I would have no idea how to even Google for. Because the test came out at the start of the year, it gives us a nice snapshot of the year.

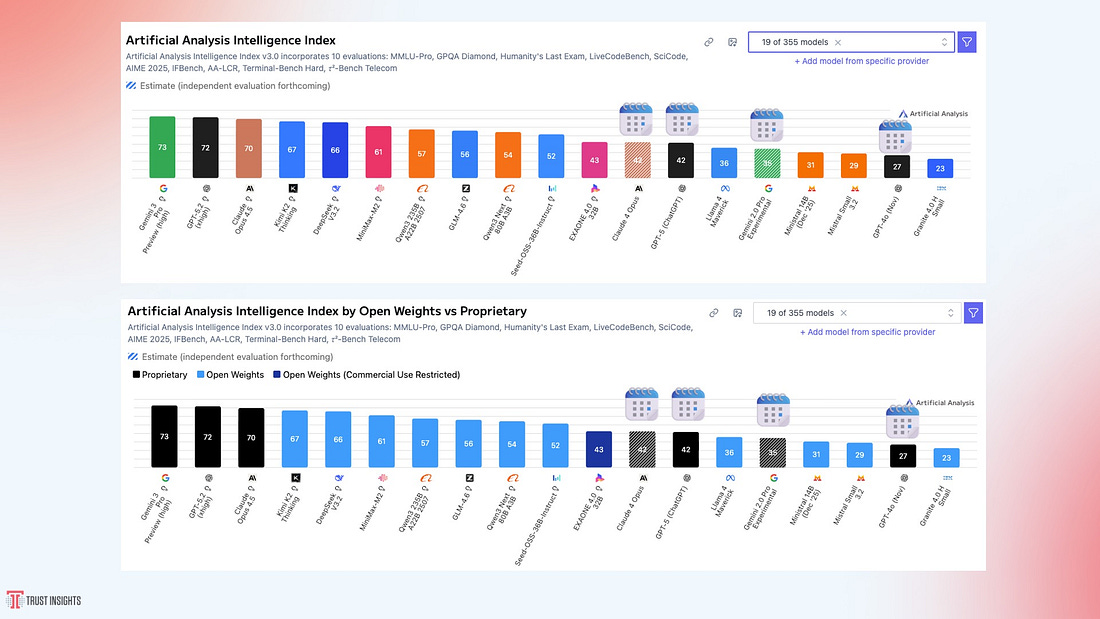

We see GPT-4o scored 5% on the test, and then GPT-5.1, which was the top model from OpenAI until GPT-5.2 came out this past week, scored 26.5%, roughly a 5x improvement. Claude started the year with Opus 3 at 3.1% and ended the year at almost 29%; that’s a 9x improvement. Gemini started the year at 6.8% for its Pro model and ended the year at 37.2%, a 5x improvement. DeepSeek started the year at 5.2% and ended at 22%, a 4x improvement. Inside of a year, on the toughest exam, AI made massive gains: scores 4x, 5x, and 9x higher than at the beginning of the year.
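If you want to check the multipliers yourself, the arithmetic is just the end-of-year score divided by the start-of-year score. This is a minimal sketch using the Humanity’s Last Exam numbers quoted above; the dictionary labels are mine, and Claude’s year-end score is entered as 29.0 as an approximation of "almost 29%."

```python
# Start-of-year and end-of-year Humanity's Last Exam scores (percent),
# taken from the figures quoted in this newsletter.
scores = {
    "OpenAI": (5.0, 26.5),
    "Claude": (3.1, 29.0),   # assumption: "almost 29%" rounded to 29.0
    "Gemini": (6.8, 37.2),
    "DeepSeek": (5.2, 22.0),
}


def improvement_factor(start: float, end: float) -> float:
    """How many times higher the end score is than the start score."""
    if start <= 0:
        raise ValueError("start score must be positive")
    return end / start


factors = {
    name: round(improvement_factor(start, end), 1)
    for name, (start, end) in scores.items()
}

for name, factor in factors.items():
    print(f"{name}: {factor}x")
```

Keep in mind these are ratios of test scores, so a 9x multiplier means nine times the score on this one exam, not a general measure of being nine times as capable.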

This isn’t just closed, proprietary models, which are denoted in black on the bottom chart. There are also open models that you can download onto your own hardware. Now, some of them, like DeepSeek, require a lot of hardware; you need roughly $50,000 worth to run it because it’s such a big model. But some of them, like EXAONE from the appliance maker LG, you can run on a laptop. Qwen3 Next from Alibaba, you can run on a beefy laptop; I run that on my MacBook. It is a hefty model that consumes a lot of resources, but you can run it. Qwen3, the 235-billion-parameter model, you can’t really run on a laptop. Same for Minimax and Kimi K2. But Seed-OSS from ByteDance, you can run on a laptop, and Llama 4 Maverick you can run on a laptop.
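A rough way to think about why a 235-billion-parameter model doesn’t fit on a laptop is to estimate how much memory the weights alone would take. This is a back-of-the-envelope sketch, not a sizing tool: the function name is hypothetical, the 16-, 8-, and 4-bit precisions are my assumptions about common ways models get stored, and it ignores everything else a running model needs, such as the context cache and the operating system.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in gigabytes (10^9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# 235 billion parameters, the Qwen3 size mentioned above.
qwen3_params = 235e9

for bits in (16, 8, 4):  # assumed storage precisions
    gb = weight_memory_gb(qwen3_params, bits)
    print(f"{bits}-bit weights: about {gb:.1f} GB")
```

Even at the most aggressive of those assumed precisions, the weights alone land well above 100 GB, which is more memory than most laptops ship with.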