In this edition, why the spat between David Sacks and The New York Times isn’t worth it, and Mistral

Technology


- Mini Mistrals

- Amazon’s chip race

- Microsoft’s momentum challenge

- What makes AI safe?

- Apple resists India order

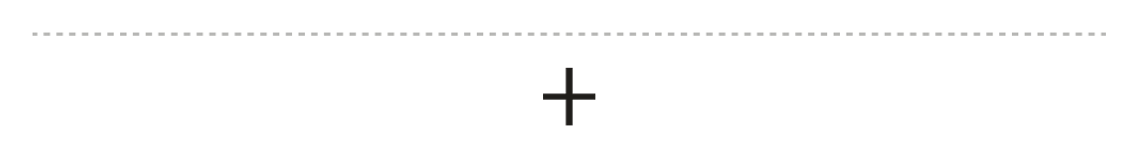

Reed’s view on a New York Times report about David Sacks, and an AI model aimed at shaking up political seats.

Is there anything less interesting than ginned-up fights between East Coast media and Silicon Valley leaders? Both sides win, of course, but the rest of us should really do our best to ignore them. I find I can’t today because, five months ago, The New York Times dispatched five reporters (with the help of four other staffers) to investigate potential conflicts of interest involving AI Czar and venture capitalist David Sacks. Eventually, they shared their reporting with Sacks, who changed their minds on some of what they’d been told.

The story that emerged was favorable for Sacks. It contains no allegations of wrongdoing, but details his investments in companies that could stand to gain indirectly from a pro-AI and pro-crypto policy stance. That’s not big news: it’s literally why he’s serving in that role. The piece was a bit of a dud as far as revealing investigations go, but the Times delivered its reporting with a good-government worldview that frowns upon such things. This is the kind of criticism that comes with high-profile political service, in a pretty mild form.

Sacks responded with theatrical outrage at the Times and a threat to sue. He may have pleased his boss, and he whipped up some outrage among Trump supporters. But his reaction rescued a Times story that would otherwise have gone mostly unread. Win-win, except for any attempt at a serious conversation about tech or policy.

Mistral’s CEO Arthur Mensch. Benoit Tessier/Reuters.

Mistral, the French AI company and champion of “open weights,” released a new family of AI models Tuesday whose most interesting feature is how small they are: lean enough to run on smartphones. “The bottom line is that we managed to squeeze much more performance into a small number of parameters,” Mistral co-founder Guillaume Lample told Semafor. Its smallest new model, Ministral 3 3B, has three billion parameters (tiny by the standards of large language models, which can run into the trillions), and the company says it can outperform some models four times its size.

We’ve only scratched the surface of how the new crop of small AI models can be used on local hardware, with no internet connection necessary. These models offer an opportunity to revive the consumer gadget space by leveraging off-the-shelf hardware and free, open AI models.

AWS’ race to replace chips

Courtesy of Amazon

Amazon unveiled its third-generation Trainium AI chip Tuesday at its annual conference in Las Vegas. It’s a big jump, the company said, from its second version, but just wait for Trainium4, presumably next year. The rapid iteration could be a financial problem: Investors worry AI chips in data centers are going to depreciate faster than companies hope.

The Trainium3 rollout offers a glimpse at another puzzle: the difficulty of separating individual chips from an entire data center working as a complete system. Amazon has configured its data centers so that old Trainium2 chips can be yanked out and replaced with new Trainium3 chips without other major changes. Indeed, many of the gains come not from the chips themselves, but from how they are connected. Not only are the latest Trainium racks using a new (somewhat secretive) kind of interconnect between server racks, but up to 144 chips inside the individual racks are also connected, allowing each chip to talk directly to another chip. In the past, they had to make multiple “hops” to do it.

The AI industry’s short sellers could be right about the chips depreciating faster than expected. But companies are also not being completely transparent about how these data centers actually work, and for good reason: those are valuable trade secrets.

The dual mandate at Microsoft Research

Reed Albergotti

Semafor/YouTube

The annual Conference on Neural Information Processing Systems kicks off in San Diego this week at an odd time for AI research, as money, energy, and talent disappear into the private sector, where new research is increasingly kept secret. Peter Lee, who heads Microsoft Research, weighed the dilemma facing company researchers in a wide-ranging interview that you can watch on Semafor’s YouTube channel. “If you are successful at creating an internal disruption, that actually harms our business. That’s the point of disruption. On the other hand, if you don’t do it and the competitor does it, you’ll get blamed for missing it,” he said.

Microsoft certainly hasn’t missed it. It oriented its company around AI, making a massive bet on OpenAI long before ChatGPT came out. But what’s next? And how does a company like Microsoft stay focused on the next disruptive idea in the lab while fighting for survival in the high-stakes AI race?

Quietly, the company has snuck into the final stage of DARPA’s Quantum Benchmarking Initiative, where the agency vets the scalability of different quantum ideas. If Microsoft’s Majorana quantum processor is successful or quantum computers can be built at scale, CEO Satya Nadella might be forced yet again to bet the future on something fresh out of the research lab. As Lee told me, “Stuff will change really rapidly.” Listen to or watch the full interview with Lee.

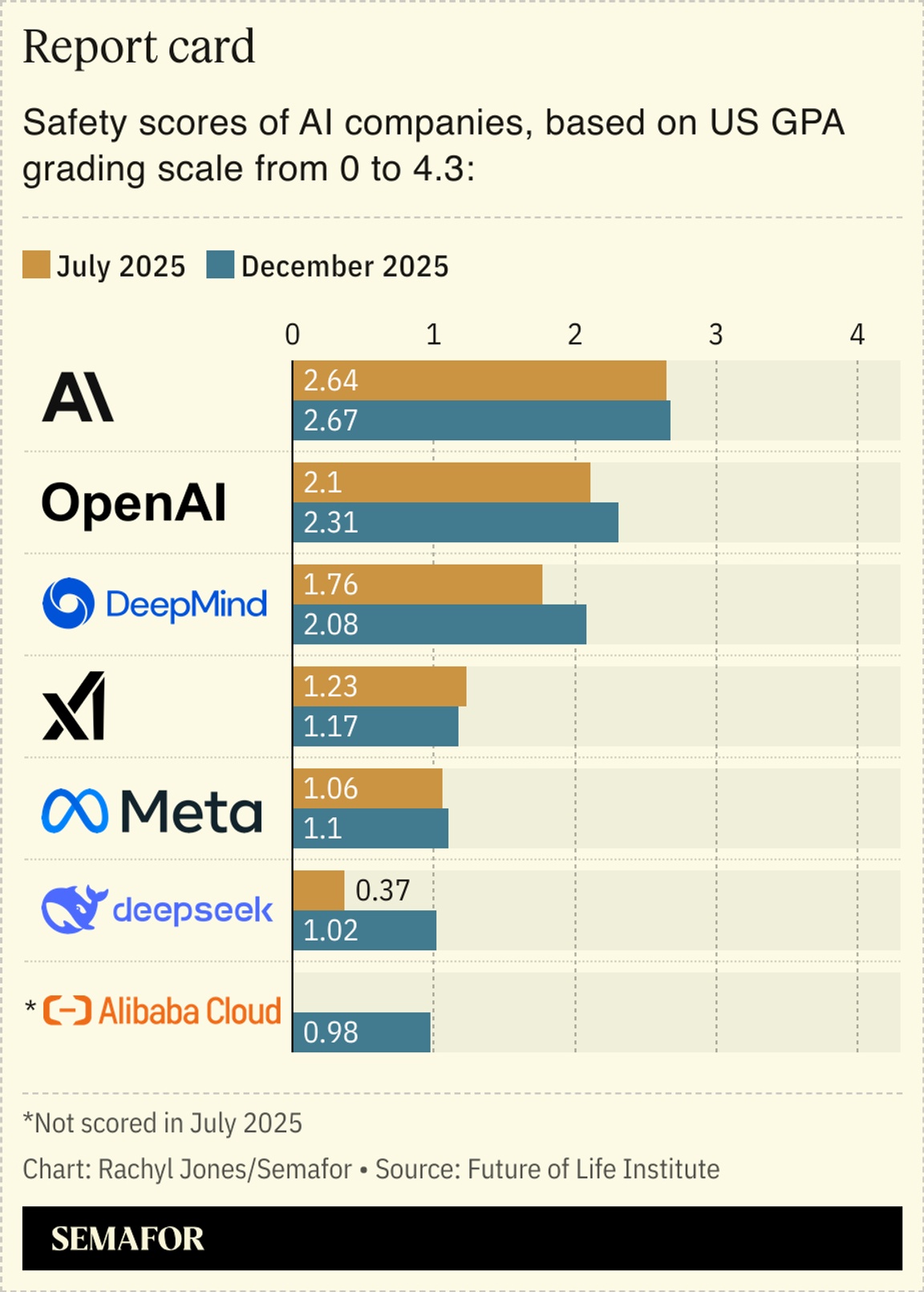

AI safety proves tricky to gauge

So what is AI safety, and how safe are the brand-name models? A new report from the Future of Life Institute seeks to answer those questions, and its biggest revelation appears to be that DeepSeek got a lot safer in the last six months. But when you dig in, the improvement rests on only two things: two employees have spoken about AI risks on panels, and DeepSeek updated a report from its launch with additional insight into how the model works. The safety report does offer a useful framing of how differently the US and China approach AI risks, with the US focused on publishing safety frameworks and other disclosures, and China putting more effort into regulations that “give Chinese firms stronger baseline accountability,” FLI wrote.

Apple reportedly refuses to install India’s app

Francis Mascarenhas/File Photo/Reuters

Back door closed. Apple won’t comply with an order in India mandating that smartphone makers push a state-owned cyber safety app onto devices, Reuters reported. The requirement, shared confidentially with smartphone providers last week, has been the subject of political outcry over fears the government could track users or access their data. The government said it hoped the app, which allows users to track stolen phones and report fraudulent calls, would help mitigate scams and regulate the second-hand device market, Reuters reported.

Apple hasn’t historically allowed the preinstallation of government or third-party apps on its phones, a stance made famous by the company’s battle with the FBI over a phone belonging to the suspect in the San Bernardino terrorist attack. Complying with the order would be a major shift in how it handles its systems and could erode consumer trust. It could also encourage other governments to try similar actions.

The mandate by India also upsets the unwritten détente between the makers of smartphone operating systems and law enforcement agencies around the world. The FBI dropped its attempt to force Apple to unlock the shooter’s phone when an Australian firm, Azimuth Security, hacked it. Since then, a cottage industry has sprung up catering to law enforcement and national security services. The result is that it’s fairly easy for anyone with deep pockets to hack into any phone, but the cost makes it impractical to hack into everyone’s phone. Abuses still occur, but not on a massive scale. An official back door for governments would allow more comprehensive surveillance by countries like India.


Fred Greaves/Reuters

AI can help achieve many goals nowadays, even if that goal is disrupting the US electoral system. The Independent Center, a nonprofit representing independent voters in the US, is using AI to identify districts where non-party candidates could win seats in the House of Representatives, NPR reported. It’s a very AI-heavy vision: The center’s proprietary AI reviews voter participation rates, ages, and topics of concern, which helps the nonprofit create a picture of an ideal political candidate. The organization then searches through its network of interested politicians, even on LinkedIn, to find candidates primed to meet the needs of specific districts.
