Disclaimer: The details in this post have been derived from the articles/videos shared online by the Facebook/Meta engineering team. All credit for the technical details goes to the Facebook/Meta Engineering Team. The links to the original articles and videos are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Facebook didn’t set out to dominate live video overnight. The platform’s live streaming capability began as a hackathon project with the modest goal of seeing how fast they could push video through a prototype backend. It gave the team a way to measure end-to-end latency under real conditions. That test shaped everything that followed.

Facebook Live moved fast by necessity. From that hackathon prototype, it took just four months to launch an MVP through the Mentions app, aimed at public figures like Dwayne Johnson. Within eight months, the platform rolled out to the entire user base of more than a billion people.

The video infrastructure team at Facebook owns the end-to-end path of every video. That includes uploads from mobile phones, distributed encoding in data centers, and real-time playback across the globe. They build for scale by default, not because it sounds good in a deck, but because scale is a constraint. When 1.2 billion users might press play, even a small architectural flaw can turn into a widespread failure.

The infrastructure needed to make that happen relied on foundational principles: composable systems, predictable patterns, and sharp handling of chaos. Every stream, whether it came from a celebrity or a teenager’s backyard, needed the same guarantees: low latency, high availability, and smooth playback. And every bug, every outage, every unexpected spike forced the team to build smarter, not bigger.

In this article, we’ll look at how Facebook Live was built and the kinds of challenges the team faced.

Core Components Behind Facebook Video

At the heart of Facebook’s video strategy lies a sprawling infrastructure. Each component serves a specific role in making sure video content flows smoothly from creators to viewers, no matter where they are or what device they’re using.

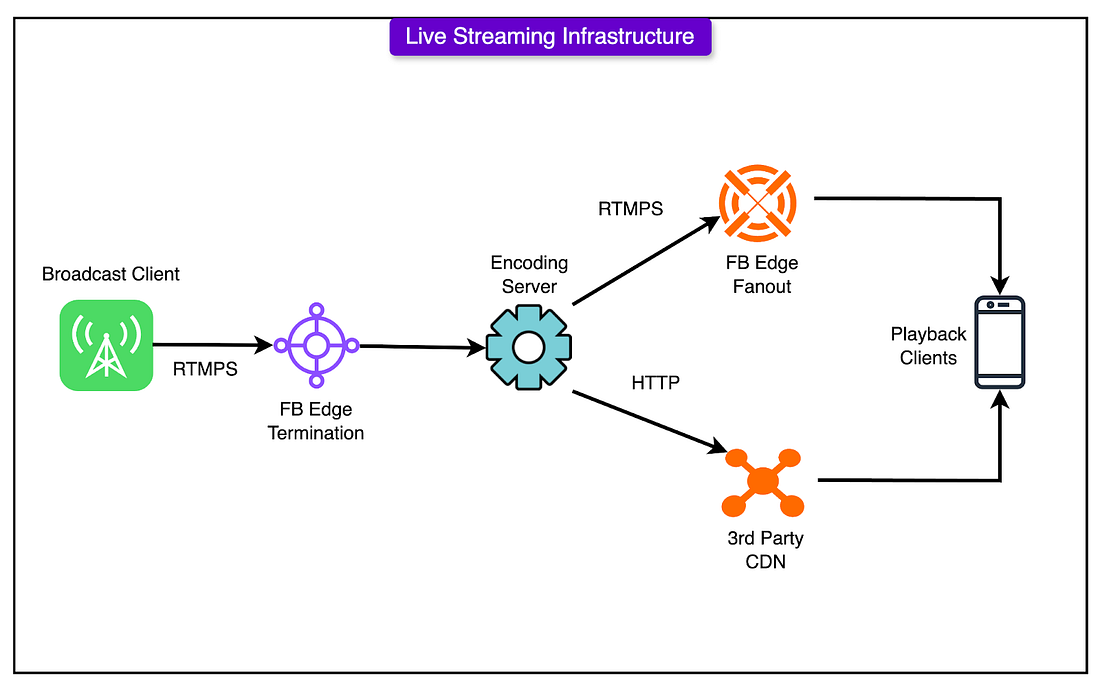

See the diagram below that shows a high-level view of this infrastructure:

Fast, Fail-Tolerant Uploads

The upload pipeline is where the video journey begins.

It handles everything from a celebrity’s studio-grade stream to a shaky phone video in a moving car. Uploads must be fast, but more importantly, they must be resilient. Network drops, flaky connections, or device quirks shouldn’t stall the system.

Uploads are chunked to support resumability and reduce retry cost.

Redundant paths and retries protect against partial failures.

Metadata extraction starts during upload, enabling early classification and processing.
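To make the chunking and retry ideas concrete, here is a minimal sketch in Python. It is not Facebook's implementation: `FlakyServer`, `put_chunk`, and the tiny `CHUNK_SIZE` are hypothetical names and values chosen for illustration, and the "server" is an in-memory stand-in that drops every third request. The point it shows is that a failure costs only one chunk's worth of retry, and that a resumed upload can skip chunks the server already holds.

```python
CHUNK_SIZE = 4  # bytes; deliberately tiny so the example is easy to trace


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[bytes]:
    """Cut the payload into fixed-size pieces (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


class FlakyServer:
    """Hypothetical in-memory upload endpoint that drops every Nth request."""

    def __init__(self, fail_every: int = 3):
        self.received: dict[int, bytes] = {}
        self._calls = 0
        self._fail_every = fail_every

    def put_chunk(self, index: int, chunk: bytes) -> None:
        self._calls += 1
        if self._calls % self._fail_every == 0:
            raise ConnectionError("simulated network drop")
        self.received[index] = chunk

    def assembled(self, total_chunks: int) -> bytes:
        """Reassemble the payload from stored chunks, in index order."""
        return b"".join(self.received[i] for i in range(total_chunks))


def upload(data: bytes, server: FlakyServer, max_retries: int = 3) -> int:
    """Upload chunk by chunk and return the number of send attempts made.

    Chunks the server already has are skipped (resumability), and a failure
    retries only the chunk that failed, never the whole file.
    """
    attempts = 0
    for index, chunk in enumerate(split_into_chunks(data)):
        if index in server.received:
            continue  # already uploaded in an earlier session
        for _ in range(max_retries):
            attempts += 1
            try:
                server.put_chunk(index, chunk)
                break
            except ConnectionError:
                continue  # retry just this chunk
        else:
            raise RuntimeError(f"chunk {index} failed after {max_retries} attempts")
    return attempts


video = b"0123456789abcdef"
server = FlakyServer(fail_every=3)
attempts = upload(video, server)
print(attempts, server.assembled(4) == video)
```

In this toy run, one simulated drop costs a single extra 4-byte send rather than a full re-upload. A real client would also persist upload state across app restarts and pick chunk sizes based on network conditions, which this sketch leaves out.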

Beyond reliability, the system clusters similar videos. This feeds recommendation engines that suggest related content to users. The grouping is based on visual and audio similarity, not just titles or tags. That helps surface videos that feel naturally connected, even if their metadata disagrees.

Encoding at Scale

Encoding is a computationally heavy bottleneck if done naively. Facebook splits incoming videos into chunks, encodes them in parallel, and stitches them back together.
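The split, encode, and stitch pattern can be sketched as follows. This is an illustration under stated assumptions, not Facebook's pipeline: `zlib` compression stands in for a real video codec, a thread pool stands in for a fleet of encoding machines, and the function names are hypothetical. A real system would also have to cut segments at points where each one can be decoded independently, which this sketch ignores.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(frames: list[bytes], segment_len: int) -> list[list[bytes]]:
    """Group consecutive frames into segments of at most segment_len frames."""
    return [frames[i:i + segment_len] for i in range(0, len(frames), segment_len)]


def encode_segment(segment: list[bytes]) -> bytes:
    """Placeholder encoder: zlib compression stands in for a video codec."""
    return zlib.compress(b"".join(segment))


def encode_in_parallel(frames: list[bytes], segment_len: int = 2, workers: int = 4) -> list[bytes]:
    """Encode every segment concurrently and return results in playback order."""
    segments = split_into_segments(frames, segment_len)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, even if workers finish out of order
        return list(pool.map(encode_segment, segments))


def stitch_and_decode(encoded_segments: list[bytes]) -> bytes:
    """Decode each segment and join them back into one continuous stream."""
    return b"".join(zlib.decompress(seg) for seg in encoded_segments)


frames = [b"f0", b"f1", b"f2", b"f3", b"f4"]
encoded = encode_in_parallel(frames, segment_len=2)
print(len(encoded), stitch_and_decode(encoded))
```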

This massively reduces latency and allows the system to scale horizontally. Some features are as follows: