
Real-Time AI at Scale Masterclass: Virtual Masterclass (Sponsored)

Learn strategies for low-latency feature stores and vector search

This masterclass demonstrates how to keep latency predictably low across common real-time AI use cases. We’ll dig into the challenges behind serving fresh features, handling rapidly evolving embeddings, and maintaining consistent tail latencies at scale. The discussion spans how to build pipelines that support real-time inference, how to model and store high-dimensional vectors efficiently, and how to optimize for throughput and latency under load.

You will learn how to:

Build end-to-end pipelines that keep both features and embeddings fresh for real-time inference

Design feature stores that deliver consistent low-latency access at extreme scale

Run vector search workloads with predictable performance—even with large datasets and continuous updates

This week’s system design refresher:

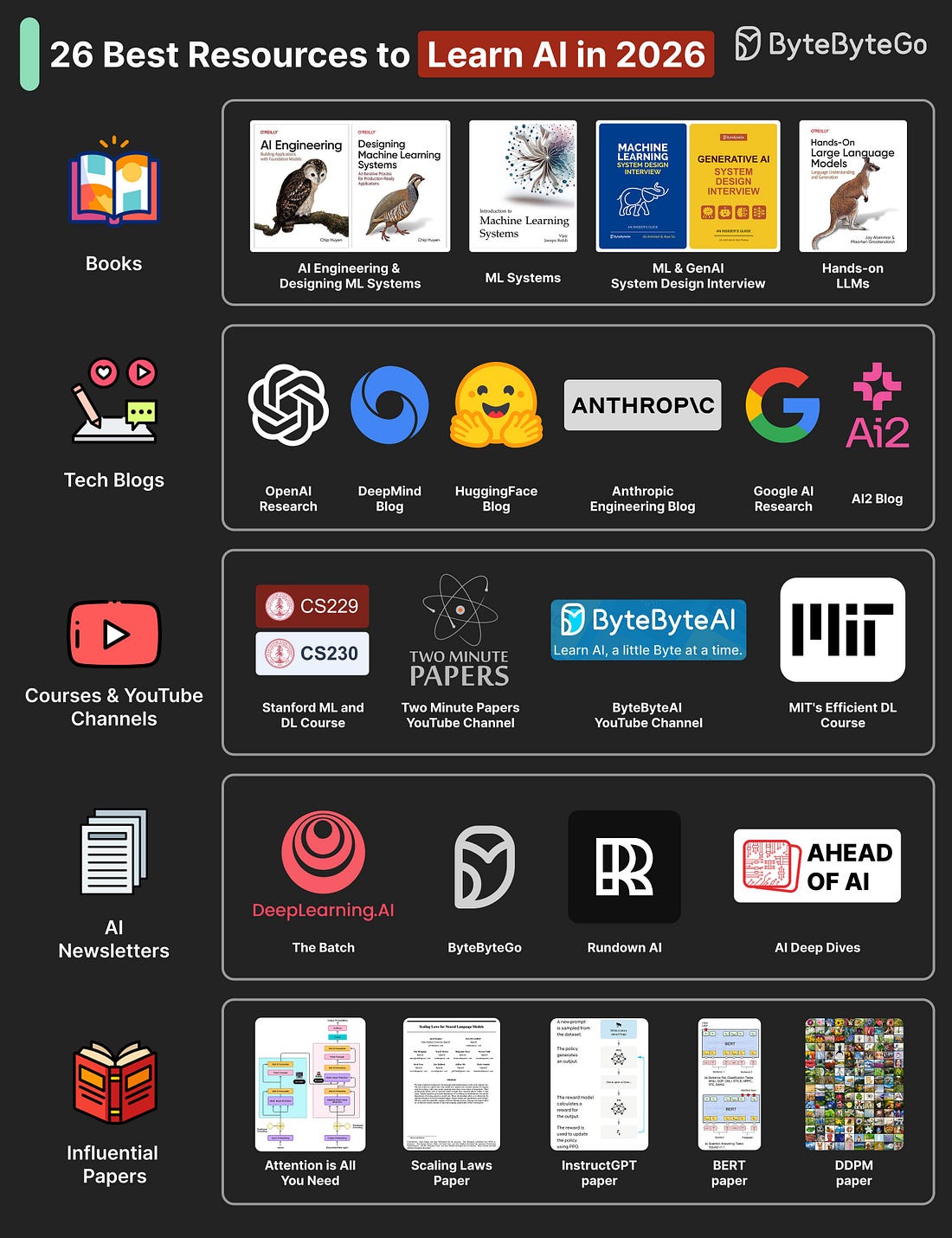

Best Resources to Learn AI in 2026

The Pragmatic Summit

Why Prompt Engineering Makes a Big Difference in LLMs?

Modern Storage Systems

🚀 Become an AI Engineer Cohort 3 Starts Today!

Best Resources to Learn AI in 2026

AI learning resources fall into several categories:

Foundational and Modern AI Books

Books like AI Engineering, Machine Learning System Design Interview, Generative AI System Design Interview, and Designing Machine Learning Systems cover both principles and practical system patterns.

Research and Engineering Blogs

Follow OpenAI Research, Anthropic Engineering, DeepMind Blog, and AI2 to stay current with new architectures and applied research.

Courses and YouTube Channels

Courses like Stanford CS229 and CS230 build solid ML foundations. YouTube channels such as Two Minute Papers and ByteByteAI offer concise, visual learning on cutting-edge topics.

AI Newsletters

Subscribe to The Batch (DeepLearning.AI), ByteByteGo, Rundown AI, and Ahead of AI to learn about major AI updates, model releases, and research highlights.

Influential Research Papers

Key papers include Attention Is All You Need, Scaling Laws for Neural Language Models, InstructGPT, BERT, and DDPM. Each represents a major shift in how modern AI systems are built and trained.

Over to you: Which other AI resources will you add to the list?

The Pragmatic Summit

I’ll be talking with Sualeh Asif, the cofounder of Cursor, about lessons from building Cursor at the Pragmatic Summit.

If you’re attending, I’d love to connect while we’re there.

📅 February 11

📍 San Francisco, CA

Why Prompt Engineering Makes a Big Difference in LLMs?

LLMs are powerful, but their answers depend on how the question is asked. Prompt engineering adds clear instructions that set goals, rules, and style. This turns vague questions and tasks into clear, well-defined prompts.
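As a rough illustration of that idea, here is a minimal Python sketch that wraps a vague question with an explicit goal, rules, and style. The `PromptSpec` class, the `build_prompt` helper, and the section labels are hypothetical names chosen for this example, not part of any LLM library, and the caching question is made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the goal, rules, and style of a prompt."""
    goal: str
    rules: list[str] = field(default_factory=list)
    style: str = ""


def build_prompt(question: str, spec: PromptSpec) -> str:
    """Wrap a raw question with explicit goal, rules, and style sections."""
    lines = [f"Goal: {spec.goal}"]
    if spec.rules:
        lines.append("Rules:")
        lines.extend(f"- {rule}" for rule in spec.rules)
    if spec.style:
        lines.append(f"Style: {spec.style}")
    # The original question goes last, trimmed of stray whitespace.
    lines.append(f"Question: {question.strip()}")
    return "\n".join(lines)


# A vague question (assumed example) turned into a well-defined prompt.
vague_question = "Tell me about caching."
spec = PromptSpec(
    goal="Explain when to add a cache in front of a database.",
    rules=["Limit the answer to three bullet points.", "Mention one trade-off."],
    style="Plain language for a junior backend engineer.",
)
prompt = build_prompt(vague_question, spec)
print(prompt)
```

The resulting string can be sent to whichever model you use. Keeping the goal, rules, and style in separate fields makes it easy to vary one at a time and compare the answers.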