
Almost Timely News: 🗞️ 3 Phases of Optimizing for AI (2025-11-23)

The Big Plug

🚨 Watch my latest keynote, How to Successfully Apply AI in Financial Aid, from MASFAA 2025.

Content Authenticity Statement

100% of this week’s newsletter was generated by me, the human. You will see Gemini’s output about pizza and activation sequences in the first section. Learn why this kind of disclosure is a good idea and might be required for anyone doing business in any capacity with the EU in the near future.

Watch This Newsletter On YouTube 📺

Click here for the video 📺 version of this newsletter on YouTube »

Click here for an MP3 audio 🎧 only version »

What’s On My Mind: 3 Phases of Optimizing for AI

This week’s newsletter is brought to you by a rant I had at MarketingProfs B2B Forum, where tons of people were talking about the alphabet soup of optimizing business content for AI, and very few of those conversations were grounded in reality. It made me cranky.

So let’s take the rant I had several times and put it in words that are somewhat less profane and more helpful.

Part 1: Definitions and Prerequisites

Let’s start with some table setting. There’s a ton of alphabet soup out there:

GEO: generative engine optimization, quite possibly the stupidest of them all because AI models are not engines in the same sense as search engines

AIO: AI optimization, which is a little better

GAIO: generative AI optimization, which is more specific but sounds like hair product

AEO: answer engine optimization, because GEO isn’t obtuse enough (seriously, who comes up with these?)

SEO: Search Everywhere Optimization, coined by Ashley Liddell of Deviate, which is a pretty good summary

All of these are intended to mean pretty much the same thing, which is optimizing your brand and content to be found by AI. Why so many variations? Blame marketers for that, as individual agencies and people try to establish something they can brand. I lean towards using AIO if I have to use something, but more often than not, I just call it AI optimization.

Next, one of the most important things to remember:

No one other than AI companies knows what actual people type into generative AI tools.

Louder for the back:

NO ONE OTHER THAN AI COMPANIES KNOWS WHAT ACTUAL PEOPLE TYPE INTO GENERATIVE AI TOOLS.

Any vendor who proudly proclaims they know what people type into ChatGPT or similar tools is either:

Lying by varying degrees, OR

Stealing information from AI companies

Neither is a good situation.

On top of that, even companies attempting to approximate a brand’s presence inside a language model (often by running thousands of synthetic prompts) still won’t get an accurate read on the results real people get. Here are a few simple examples of why that’s largely a fool’s errand.

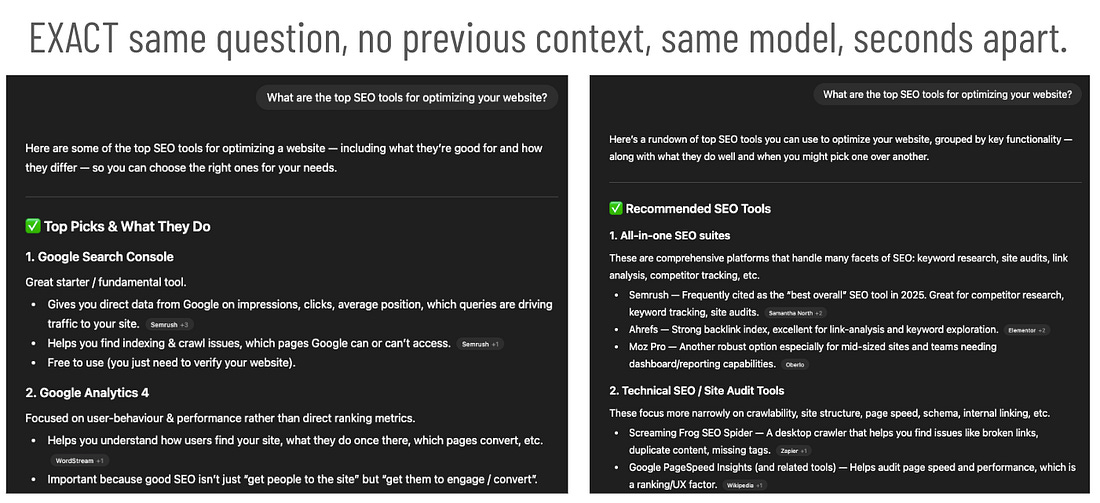

First, all AI tools are probabilistic. Type the same prompt into ChatGPT in separate, new chats, and you’ll get conceptually similar answers, but the specifics WILL vary, because that’s the nature of generative AI. Type in “What are the top SEO tools for optimizing your website?” and you can get wildly different results every time. Sometimes you get Semrush or Ahrefs. Sometimes you get Google Search Console. Sometimes you get waffling.

Here is the exact same question, asked seconds apart in ChatGPT using the exact same model, with no previous context and memory turned off. Wildly different answers.

Can you factually claim that XYZ brand ranks top in ChatGPT? Absolutely not.
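To make the probabilistic point concrete, here is a minimal Python sketch of temperature sampling, the general technique language models use to pick each next token from a probability distribution. Everything specific in it is an assumption for illustration: the candidate names and their scores in `LOGITS` are invented, each tool name is treated as a single choice even though real models generate text token by token, and real products layer on other settings (such as top-p) that vendors don’t fully disclose.

```python
import math
import random
from collections import Counter

# ASSUMPTION: invented scores (logits) for which tool a model might name first.
# Real models score individual tokens, not whole brand names.
LOGITS = {
    "Semrush": 2.1,
    "Ahrefs": 2.0,
    "Google Search Console": 1.6,
    "Moz": 1.2,
}


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = {name: score / temperature for name, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {name: math.exp(s - peak) for name, s in scaled.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def sample_first_pick(logits, temperature, rng):
    """Draw one candidate at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    names = list(probs)
    return rng.choices(names, weights=[probs[n] for n in names], k=1)[0]


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so results differ from run to run
    # Ten "new chats" with the identical prompt, each an independent draw.
    print([sample_first_pick(LOGITS, 1.0, rng) for _ in range(10)])
    # How often each tool came up first across 1,000 simulated chats.
    print(Counter(sample_first_pick(LOGITS, 1.0, rng) for _ in range(1000)))
```

Run it a few times and the ten picks change, even though the “model” and the prompt never do. Lowering `temperature` concentrates probability on the top-scoring candidate, but nobody outside the AI company controls or sees the production settings.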

Not only are the tools probabilistic and unable to replicate an answer, but because of how AI works, even a single-token variation can produce dramatically different answers. “What are the best SEO tools for optimizing your website” and “What are the top SEO tools for optimizing your website” will yield different answers. To us, they pretty much mean the same thing. To AI models, they are different inputs, and that one-word difference generates different results.

On top of that, AI tools now often have model switching built in. Tools like Microsoft Copilot and ChatGPT automatically select a model based on the estimated difficulty of the task, so depending on where in the conversation you ask for something, a different model may answer your question. ChatGPT has 6 different models under the hood: GPT-5.1, 5.1-mini, 5.1-nano, 5.1-chat-latest, 5.1-codex-high, and 5.1-codex-mini.

Which model is answering the user’s question? You don’t know. Neither do I. And when you ask these individual models, they give different answers to the same question. Ask the same question of 5.1-mini and 5.1-chat-latest, and you’re going to get very different answers. Some models even have different technical capabilities than others.
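Vendors don’t publish how their routers decide which model answers, so the following Python sketch is purely hypothetical: the tier names, the `estimate_difficulty` heuristic, and its thresholds are all made up. It only illustrates the structural point above, that the same question can land on a different model depending on context that anyone running a visibility report never sees.

```python
from dataclasses import dataclass


# HYPOTHETICAL tiers: placeholder names and thresholds, not any vendor's real
# routing rules, which are not public.
@dataclass(frozen=True)
class Tier:
    name: str
    max_difficulty: float  # use this tier if estimated difficulty <= this value


TIERS = [
    Tier("fast-small-model", 0.3),
    Tier("general-chat-model", 0.7),
    Tier("deep-reasoning-model", 1.0),
]


def estimate_difficulty(prompt: str, turns_so_far: int) -> float:
    """HYPOTHETICAL heuristic: longer prompts and longer chats score as harder."""
    length_score = min(len(prompt.split()) / 50, 1.0)
    context_score = min(turns_so_far / 20, 1.0)
    return max(length_score, context_score)


def route(prompt: str, turns_so_far: int) -> str:
    """Return the first tier whose ceiling covers the estimated difficulty."""
    difficulty = estimate_difficulty(prompt, turns_so_far)
    for tier in TIERS:
        if difficulty <= tier.max_difficulty:
            return tier.name
    return TIERS[-1].name  # fallback; unreachable while scores are capped at 1.0


if __name__ == "__main__":
    question = "What are the top SEO tools for optimizing your website?"
    for turns in (0, 10, 18):
        print(turns, route(question, turns))
```

With this toy heuristic, the SEO question goes to `fast-small-model` at the start of a chat, `general-chat-model` ten turns in, and `deep-reasoning-model` eighteen turns in. Real routers almost certainly weigh different signals, but the effect for an outside observer is the same: you can’t tell which model produced a given answer.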

Even when the user is given a choice, the models behave differently. Google Gemini Flash works differently from, and gives different answers than, Gemini Pro. Claude Sonnet 4.5 gives different answers depending on whether Extended Thinking is turned on or off.

And this doesn’t even begin to touch on customization and memory, features many AI tools have introduced that let you personalize them, from giving them cute nicknames to telling them never to use em-dashes. These memories and customizations become part of the overall system prompt, which means you can’t reproduce even a single-sentence question’s results from user to user, because every user has a different set of system instructions and customizations.

When you tell ChatGPT, “hey, remember this about me,” it writes that into its memory, and that memory becomes part of every prompt. So the one-word difference in the SEO question could now be an eight-paragraph difference from user to user, because each user has different memories stored in ChatGPT or Gemini or Claude. All the major tools support memory, and that is going to completely screw up any ability you have to predict what people are typing into these tools.
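Here is a minimal Python sketch of why memory breaks reproducibility. The function name `build_effective_prompt`, the `BASE_SYSTEM` text, the section labels, and the order in which the pieces are joined are all assumptions; vendors don’t publish exactly how memories and custom instructions are merged into the system prompt. The point holds regardless of layout: two users typing the identical question send the model two different inputs.

```python
# HYPOTHETICAL layout: real tools don't disclose how they merge memories and
# custom instructions, so the labels and ordering here are illustrative only.
BASE_SYSTEM = "You are a helpful assistant."


def build_effective_prompt(custom_instructions: list[str], memories: list[str],
                           user_message: str) -> str:
    """Concatenate base instructions, personalization, and the user's message."""
    parts = [BASE_SYSTEM]
    if custom_instructions:
        parts.append("User preferences:\n" + "\n".join(f"- {c}" for c in custom_instructions))
    if memories:
        parts.append("Saved memories:\n" + "\n".join(f"- {m}" for m in memories))
    parts.append("User: " + user_message)
    return "\n\n".join(parts)


if __name__ == "__main__":
    question = "What are the top SEO tools for optimizing your website?"
    alice = build_effective_prompt(
        ["Call me Captain."],
        ["Runs marketing at a B2B software company.", "Prefers free tools."],
        question,
    )
    bob = build_effective_prompt(["Never use em-dashes."], [], question)
    print(alice == bob)  # False: same question, different full inputs
    print(alice)
    print("---")
    print(bob)
```

Alice’s input carries her saved memories; Bob’s doesn’t. Multiply that by every user’s accumulated memories and custom instructions, and a synthetic prompt run from a clean account is testing an input that may look nothing like what real users send.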

So before we proceed, let’s cement this particular piece of knowledge: no one knows what users are typing into AI tools, and no one is likely to approximate it well with any level of credibility.

So what can we know?