
Technology

- Keeping research close to the vest

- Anthropic tells on itself

- Google’s breakup fight

- Flat-footed robots

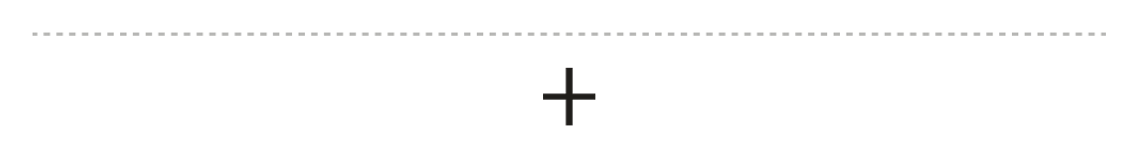

Satya Nadella on what makes the AI boom sustainable, and a new way to dial Jesus.

In a long, revealing interview with Dwarkesh Patel this week, Microsoft’s Satya Nadella gave the best argument I’ve heard for believing that the AI buildout may be sustainable.

Nadella was asked whether one AI model would win. “If there’s one model that is the only model that’s most broadly deployed in the world and it sees all the data and it does continuous learning, that’s game-set-match and you stop shop,” he responded. That, he said, is wildly unlikely to happen, and he argued that a focus on the models is the wrong way to look at the industry.

To take advantage of AI, developers need a lot of other compute infrastructure built around it, like databases and web services. You might be able to create chatbots without much of this stuff. But the powerful, agentic apps and automations that companies and startups are hoping to create will take a lot more than a GPU.

And in this world that Nadella envisions, there will be many, many different kinds of AI models. That requires building data centers that are versatile and not tied to one kind of AI model. Nadella said that’s why he didn’t opt to build all the infrastructure that Microsoft’s partner, OpenAI, required. That sent OpenAI into the arms of Oracle, a competitor, which doesn’t bother Nadella. “We didn’t want to just be a hoster for one company and have just a massive book of business with one customer. That’s not a business,” he said.

Instead of building as much compute capacity as possible, Microsoft has been trying to meet demand for compute as it materializes all over the world. If the company can do this successfully, it can avoid buying a bunch of expensive GPUs that will sit idle while they depreciate in value.

By the end of the interview, you get a sense that this reckless AI buildout that we’re all hearing about is really much more like the same old cloud business, but fast-growing, massive, and transformative. Some people may end up getting burned if they aren’t deliberate and methodical. And there could be a black swan event, like some insane, overnight leap in capability. Barring those things, the AI buildout is a lot more pragmatic than it seems. “Ultimately, you’re allowed to build ahead of demand, but you better have a demand plan that doesn’t go completely off-kilter,” Nadella said.

Research should be shared, not hidden

Reed Albergotti

Snorkel AI’s Braden Hancock (R) and Semafor’s Reed Albergotti. Semafor/YouTube.

Before the ChatGPT moment, big tech companies and startups were already attracting some of academia’s most talented computer scientists and putting them to work on artificial intelligence. The general agreement was that academics would still be allowed to publish papers on their work. But since the AI race has heated up, publishing out of tech companies has dropped, a close-to-the-vest tactic that, ironically, may slow down AI progress, even as these companies spend billions on massive data centers to train and run models.

Computer scientist and entrepreneur Andy Konwinski looked at the state of computer science research and decided to put $100 million of his money into the Laude Institute, a nonprofit with a public benefit corporation aimed at funding computer science research that might otherwise die on the vine.

On the heels of Laude’s recent announcement of its first batch of “slingshots” grants, I spoke to one of its research partners, Braden Hancock, who is also the co-founder of another AI data research lab, Snorkel. I asked Hancock about the state of AI research and where we might see the next breakthroughs. Watch or listen to the full conversation on YouTube.

Anthropic’s odd, but effective, hack exposure

Chance Yeh/Getty Images for HubSpot

When it comes to AI marketing, the more apocalyptic the better. So if cybercriminals are going to use your AI tools in a hacking spree, you might as well boast about it.

Anthropic revealed Thursday it disrupted what it believes is a Chinese state-sponsored group that succeeded in hacking a “small number” of global targets using Claude Code with minimal human engagement, which it called “an inflection point” in cybersecurity. The hackers’ use of Claude, rather than homegrown models like DeepSeek, highlights Anthropic’s (and the US’) lead in the sector. And it puts the company at the center of a moment cyber professionals have been preparing for: when automated hacks become a bigger threat than human-driven ones, requiring stronger AI-powered defenses.

Anthropic has put itself out there in the name of safety, publishing details on what it uncovers about its models that other companies often try to conceal. Earlier this year, it revealed that in experiments, Claude attempted to blackmail a supervisor and fictitious individuals to avoid being shut down. These reports have, in some ways, offered insight into what’s likely happening across AI companies, while also fulfilling Anthropic’s self-described safety MO. They also frame the company as the playground safety captain needed in a developing industry, one using a self-reporting tactic regulators prefer.

It seems to be working. None of these disclosures has tanked Anthropic’s reputation; they’ve instead bolstered its status as one of the most transparent players in AI.

As AI reshapes competitive advantage, separating hype from real opportunity has never been more critical. Nate’s Newsletter is a daily brief that does exactly that. Written by Nate B. Jones, an enterprise advisor with 15 years building AI systems at scale, each issue delivers straight-talking analysis, actionable frameworks, and weekly executive briefings. Trusted by over 60,000 professionals, including Fortune 100 leaders. Subscribe for free.

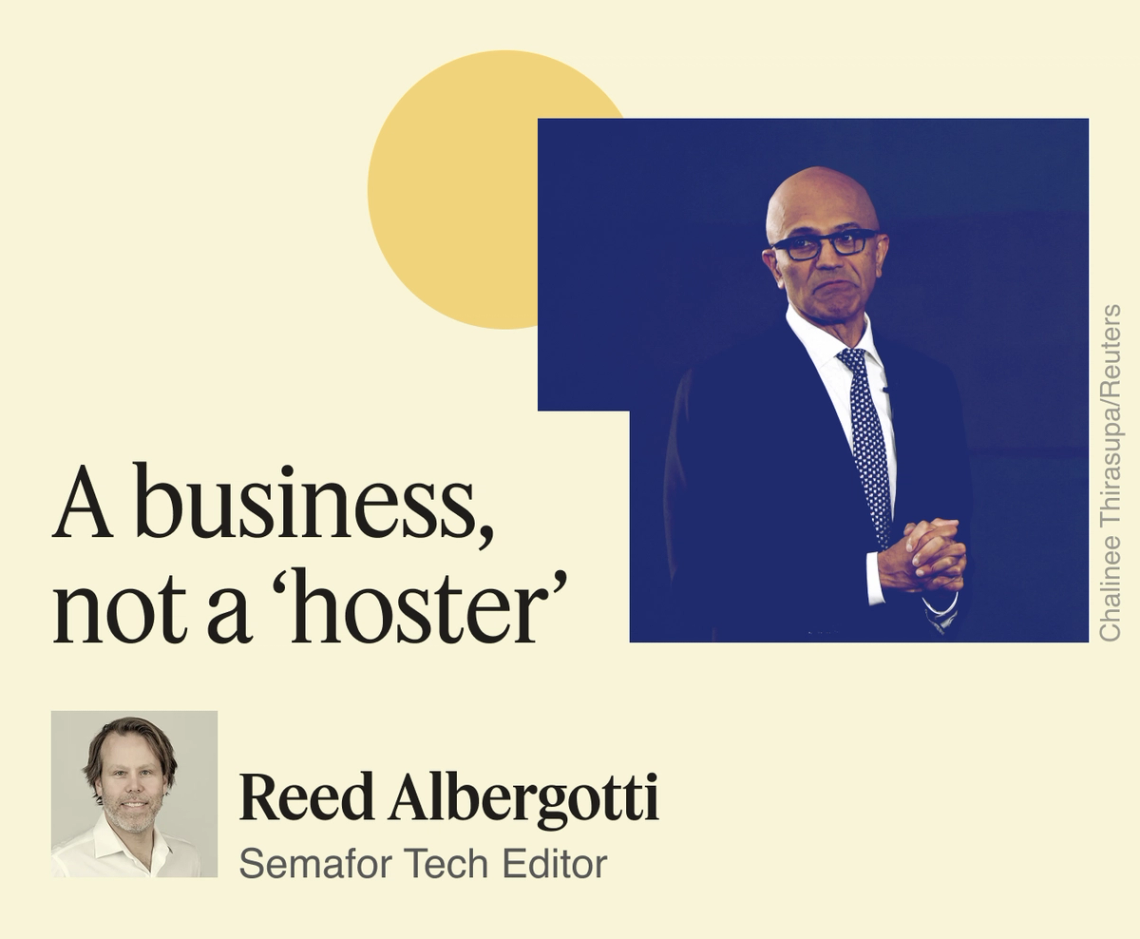

Google says ‘no’ to split

In its response on Friday to the European Commission’s landmark September decision, which found that its adtech business breached antitrust rules, Google doubled down by rejecting a breakup of the business. The move could lead the EU to force a divestiture if it is left unsatisfied with Google’s remedies. The company did propose product changes in an attempt to address the bloc’s concerns, but argued a “disruptive” breakup “would harm the thousands of European publishers and advertisers who use Google tools to grow their business.”

US darling Google has faced several antitrust and competition lawsuits in Europe, as the bloc attempts to both act as tech referee and gain position in a highly competitive global AI race. In Google’s latest European battle, a German court ordered it Friday to pay two price comparison platforms roughly €572 million ($665 million) in total for abusing that market, Reuters reported. A Google spokesperson suggested the problem has been solved and that the company rejects the rulings, telling Reuters: “changes we made in 2017 have proven successful without intervention from the European Commission.”

Brussels is also readying a new investigation into the company for allegedly demoting certain news outlets in its search pages, according to the Financial Times. The reported probe could throw a wrench into efforts to ease transatlantic trade tensions, as the EU prepares the next phase of its trade truce with Washington, Bloomberg reported.

Some robots stumble, and that’s okay

Moskva City News Agency/Reuters

Falling flat. During one of Russia’s first opportunities to showcase domestic robotic technology to the world, a highly anticipated humanoid robot developed by startup AIDOL toppled over onstage shortly after its demonstration began at a Moscow tech show. The online fanfare accompanying the viral video overshadowed a still-impressive achievement in the early days of humanoids, especially by a team that AIDOL told Business Insider operates independently, without the backing of a large corporation or government funds.

It’s a good reminder that while the big brand names of 2025 appear dominant, there’s room for new players. One of them is Andy Rubin, Android co-founder and former Google executive, who has launched a new humanoid robot startup in Japan, The Information reported. Meanwhile, Tesla has set aggressive timelines for its droid Optimus, which is slated to begin production at the end of next year, according to Business Insider.

Is television the final form of all media? Derek Thompson, the co-author of Abundance, podcaster, and Atlantic writer, joins Mixed Signals to explain what he sees as the forces behind what Ben and Max keep observing: the way in which podcasts and other forms of journalism appear to be getting their largest audience in an endless, passive feed of videos first observed by analysts of 20th century television. Derek discusses all that, as well as his own turn toward independent media and his personal pivot to video.

Screenshot/Text With Jesus

Jesus is just a text away. An app that allows users to converse with a chatbot trained on the Bible, called Text With Jesus, has created a new way to “engage with your faith,” the app’s description says. Users can share daily stressors or ask for guidance, and the bot will respond with Bible verses, their interpretations, and prayers. Available personas include Jesus and the apostles, with the Three Wise Men coming soon. Conversations with Satan are available for premium users.

The app is one of many ways people of faith are exploring letting AI play a role in their spiritual lives. Earlier this year, a Lutheran church in Finland held a service almost entirely prepared by AI. But critics have raised concerns about mixing chatbots with religion and therapy. Pope Leo XIV, much like his predecessor, has warned about the technology, recently saying, “It must not be forgotten that artificial intelligence functions as a tool for the good of human beings, not to diminish them, not to replace them.”