

Disclaimer: The details in this post have been derived from the official documentation shared online by the Airbnb Engineering Team. All credit for the technical details goes to the Airbnb Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

For years, companies relied on large, expensive standalone servers to run their databases. As traffic increased, the standard approach was to implement sharding, which involved dividing the data into smaller pieces and distributing them across multiple machines. While this worked for a time, it became a heavy burden. Maintaining shards, handling upgrades, and keeping the whole system flexible turned into a complex and expensive problem.
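To show why maintaining shards becomes a burden, here is a minimal Python sketch of one common routing scheme, hash-mod-N. The article does not say which scheme any particular company used. `shard_for` and `moved_fraction` are hypothetical helpers. The sketch shows that changing the shard count reassigns most keys, and every reassigned key is data that has to move between machines.

```python
import hashlib

def shard_for(key: str, num_shards: int) -> int:
    """Route a key to a shard with hash-mod-N (a simple, hypothetical scheme)."""
    # hashlib gives a hash that is stable across processes;
    # Python's built-in hash() of a str is salted per process.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def moved_fraction(keys: list[str], old_shards: int, new_shards: int) -> float:
    """Fraction of keys whose shard changes when the shard count changes."""
    moved = sum(1 for k in keys if shard_for(k, old_shards) != shard_for(k, new_shards))
    return moved / len(keys)

keys = [f"user:{i}" for i in range(10_000)]
print(shard_for("user:42", 4))
print(f"{moved_fraction(keys, 4, 5):.0%} of keys change shards going from 4 to 5 shards")
```

With a uniform hash, a key stays put only when its hash leaves the same remainder mod 4 and mod 5. That happens for 4 out of every 20 hash values, so roughly 80% of keys have to be copied elsewhere when a single shard is added.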

The last decade has seen the rise of distributed, horizontally scalable open-source SQL databases. These systems allow organizations to spread data across many smaller machines rather than relying on a single giant one.

However, there’s a catch: running such databases reliably in the cloud is far from simple. It’s not just about spinning up more servers. You need to ensure strong consistency, high availability, and low latency, all without increasing costs. This balance has proven tricky for even the most advanced engineering teams.

This is where Airbnb’s engineering team took an unusual path.

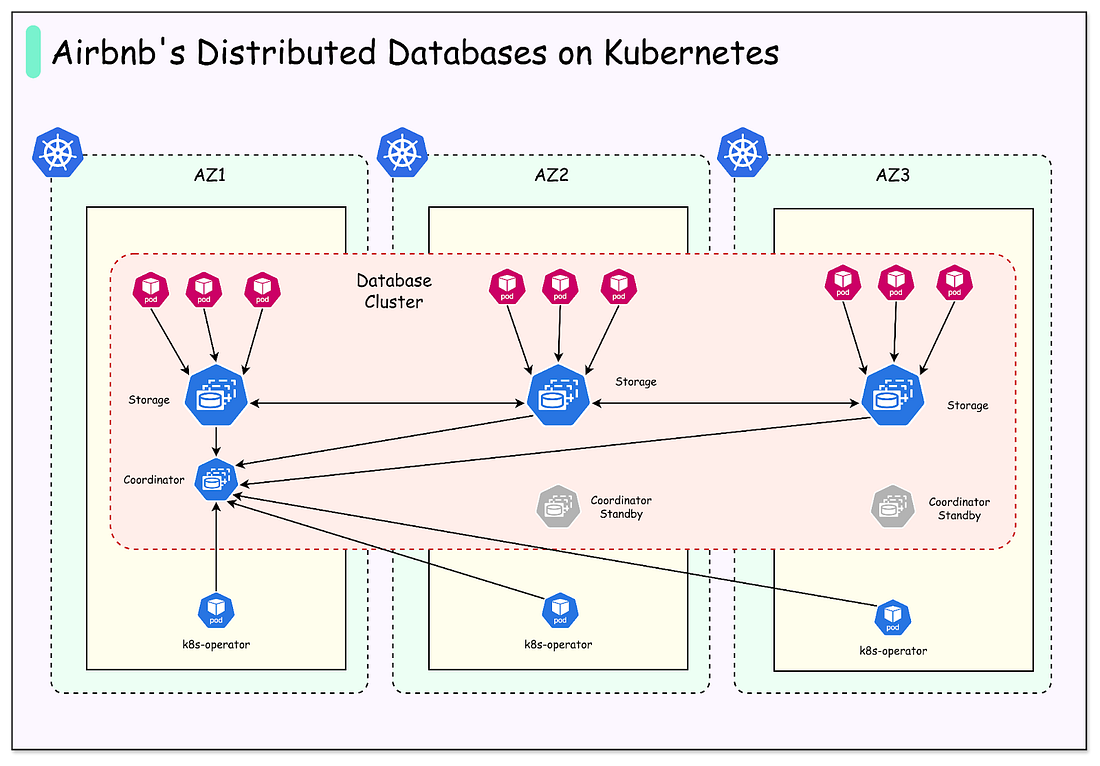

Instead of confining a database cluster to a single Kubernetes cluster, they chose to deploy each distributed database cluster across multiple Kubernetes clusters, each one mapped to a different AWS Availability Zone.

This is not a common design pattern. Most companies avoid it because of the added complexity. But Airbnb’s engineers saw it as the best way to ensure reliability, reduce the impact of failures, and keep operations smooth.
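Here is a small Python sketch of why spreading replicas across zones limits the impact of a failure. It assumes one replica of each piece of data per AZ-specific Kubernetes cluster. The article does not give Airbnb's replica counts, and the zone names and `survives_zone_loss` helper are hypothetical. The function checks whether losing any single Availability Zone still leaves a majority of replicas.

```python
from collections import Counter

def survives_zone_loss(replica_zones: list[str]) -> bool:
    """True if losing any one zone still leaves a majority of replicas alive."""
    total = len(replica_zones)
    majority = total // 2 + 1
    per_zone = Counter(replica_zones)  # replicas hosted in each zone
    return all(total - count >= majority for count in per_zone.values())

# Hypothetical layouts: one Kubernetes cluster per AZ.
spread = ["us-east-1a", "us-east-1b", "us-east-1c"]
stacked = ["us-east-1a", "us-east-1a", "us-east-1b"]
five = ["us-east-1a", "us-east-1a", "us-east-1b", "us-east-1b", "us-east-1c"]

print(survives_zone_loss(spread))   # True
print(survives_zone_loss(stacked))  # False
print(survives_zone_loss(five))     # True
```

When two of three replicas sit in the same zone, a single AZ outage removes the majority. That is the kind of failure a one-cluster-per-AZ layout helps contain.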

In this article, we will look at how Airbnb implemented this design and the challenges they faced.


Running Databases on Kubernetes

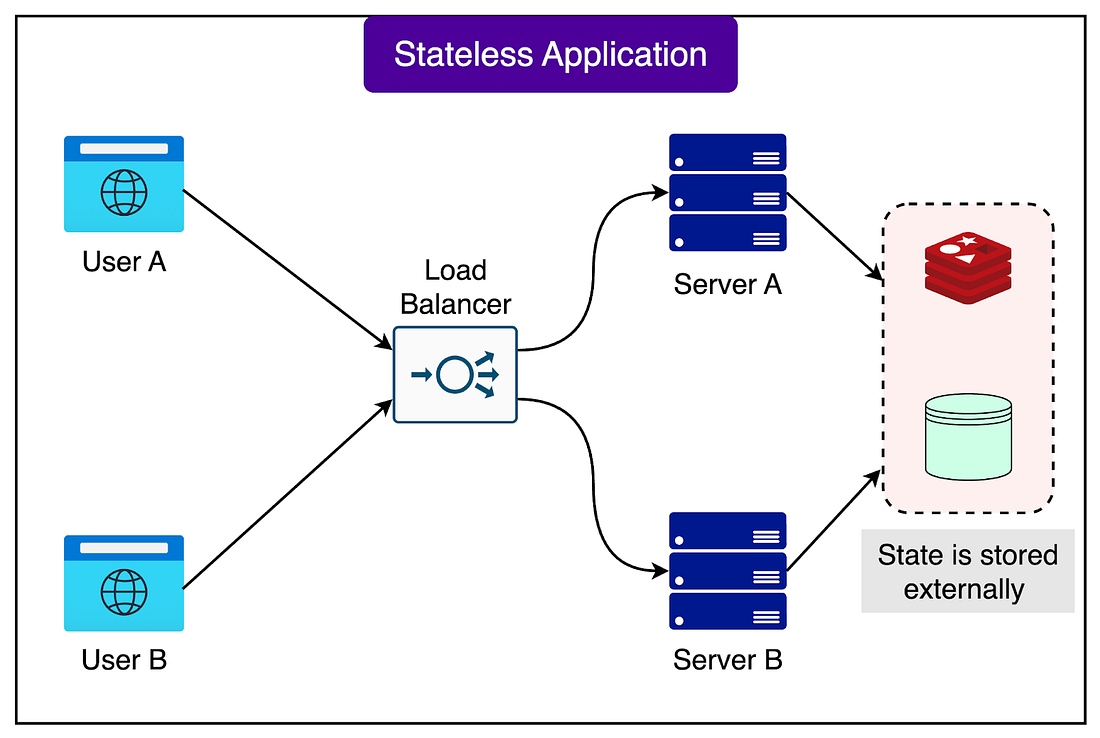

Kubernetes is very good at running stateless workloads. A workload is called stateless when it does not need to remember anything between requests. For example, a web server that simply returns HTML pages is stateless. If one server goes down, another can immediately take over, because there is no important memory or data tied to a single machine.


Databases, however, are stateful. They must keep track of data, store it reliably, and make sure that changes are not lost.

Running stateful systems on Kubernetes is harder because when a database node is replaced or restarted, the system must ensure the stored data is not corrupted or lost.

One of the biggest risks comes from node replacement. In a distributed database, each piece of data is replicated across several nodes. To guarantee correctness, the cluster depends on a quorum: a majority of the replicas holding that data (for example, 2 out of 3 or 3 out of 5) must agree on its current state. If too many replicas fail or are replaced at the same time, the quorum is lost and the database can stop serving requests for that data. By default, Kubernetes does not know how data is spread across nodes, so it cannot prevent dangerous replacements.
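The majority rule can be written out directly. This is a minimal Python sketch. The function names are illustrative and do not come from Airbnb's code.

```python
def majority(replicas: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replicas // 2 + 1

def max_failures(replicas: int) -> int:
    """How many replicas can be unavailable while a majority remains."""
    return replicas - majority(replicas)

def has_quorum(replicas: int, unavailable: int) -> bool:
    """True if the replicas still available can form a majority."""
    return replicas - unavailable >= majority(replicas)

for n in (3, 5):
    print(n, majority(n), max_failures(n))
# 3 2 1
# 5 3 2

print(has_quorum(3, 1))  # True: one replica is down for replacement
print(has_quorum(3, 2))  # False: a second replica is replaced at the same time
```

This is why replacements need coordination. With three replicas, taking one down for replacement is safe. A second failure or replacement during that window breaks the quorum.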

The Airbnb engineering team solved this problem with a few smart techniques:

Using AWS EBS volumes: Each database node stores its data on Amazon Elastic Block Store (EBS), which is a durable cloud storage system. If a node is terminated or replaced, the EBS volume can be detached from the old machine and quickly reattached to a new one. This avoids having to copy the entire dataset to a fresh node from scratch.

Persistent Volume Claims (PVCs): In Kubernetes, a PVC is a way for an application to request storage that survives beyond the life of a single pod or container. Airbnb uses PVCs so that when a new node is created, Kubernetes automatically reattaches the existing EBS volume to it. This automation reduces the chance of human error and speeds up recovery.

Custom Kubernetes Operator: An operator is like a “smart controller” that extends Kubernetes with application-specific logic. Airbnb built a custom operator for its distributed database. Its job is to carefully manage node replacements. It makes sure that when a new node comes online, it has fully synchronized with the cluster before another replacement happens. This process is called serializing node replacements, and it prevents multiple risky changes from happening at the same time.
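The PVC technique can be sketched in Python by building the claim that a Kubernetes StatefulSet creates for one database pod. This is an illustrative sketch, not Airbnb's configuration. The `data`/`db` names, the 500Gi size, and the `ebs-gp3` storage class are assumptions. The important detail is that the claim name comes from the pod's stable identity. A replacement pod named `db-0` therefore binds to the same claim, and so to the same EBS volume, as the pod it replaces.

```python
import json

def pvc_manifest(claim_template: str, statefulset: str, ordinal: int,
                 size: str = "500Gi", storage_class: str = "ebs-gp3") -> dict:
    """Build a PVC shaped like the one a StatefulSet creates for pod <statefulset>-<ordinal>."""
    pod_name = f"{statefulset}-{ordinal}"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        # StatefulSets name claims <template>-<pod>, so the name survives pod replacement
        "metadata": {"name": f"{claim_template}-{pod_name}"},
        "spec": {
            # EBS volumes are normally attached to one instance at a time
            "accessModes": ["ReadWriteOnce"],
            # Hypothetical StorageClass assumed to provision EBS volumes
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }

pvc = pvc_manifest("data", "db", 0)
print(pvc["metadata"]["name"])  # data-db-0
print(json.dumps(pvc, indent=2))
```

Keep one AWS constraint in mind. An EBS volume can only be attached to an instance in its own Availability Zone, so the replacement pod has to be scheduled in the same zone as its volume.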
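The serialization rule described for the operator can be sketched as a reconcile step that keeps at most one replacement in flight. This is a simplified, hypothetical model in Python, not Airbnb's operator. `DbNode`, `ReplacementSerializer`, and the `synced` flag are stand-ins. A real operator would read sync status from the database itself and run inside a Kubernetes controller loop.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class DbNode:
    name: str
    needs_replacement: bool = False
    synced: bool = True  # hypothetical: has this node caught up with the cluster?

class ReplacementSerializer:
    """Hypothetical operator logic: replace at most one node at a time."""

    def __init__(self, nodes: list[DbNode]):
        self.nodes = {n.name: n for n in nodes}
        self.queue = deque(n.name for n in nodes if n.needs_replacement)
        self.in_flight: str | None = None
        self.log: list[str] = []

    def reconcile(self) -> str:
        # Step 1: if a replacement is in progress, wait until the new node has caught up.
        if self.in_flight is not None:
            node = self.nodes[self.in_flight]
            if not node.synced:
                return "waiting"
            self.log.append(f"{node.name} synced")
            self.in_flight = None
        # Step 2: start the next replacement only when nothing else is in flight.
        if self.queue:
            node = self.nodes[self.queue.popleft()]
            node.needs_replacement = False
            node.synced = False  # it missed writes while down and must catch up
            self.in_flight = node.name
            self.log.append(f"replacing {node.name}")
            return "replacing"
        return "idle"

nodes = [DbNode("db-0", needs_replacement=True),
         DbNode("db-1", needs_replacement=True),
         DbNode("db-2")]
op = ReplacementSerializer(nodes)
print(op.reconcile())           # replacing (db-0)
print(op.reconcile())           # waiting: db-0 has not caught up yet
op.nodes["db-0"].synced = True  # in reality, the database reports this
print(op.reconcile())           # replacing (db-1)
op.nodes["db-1"].synced = True
print(op.reconcile())           # idle
print(op.log)
```

Even though two nodes were queued, `db-1` was not touched until `db-0` reported itself synced. This is the property that keeps a second replacement from eating into the quorum.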

See the diagram below that shows the concept of a PVC in Kubernetes: