|

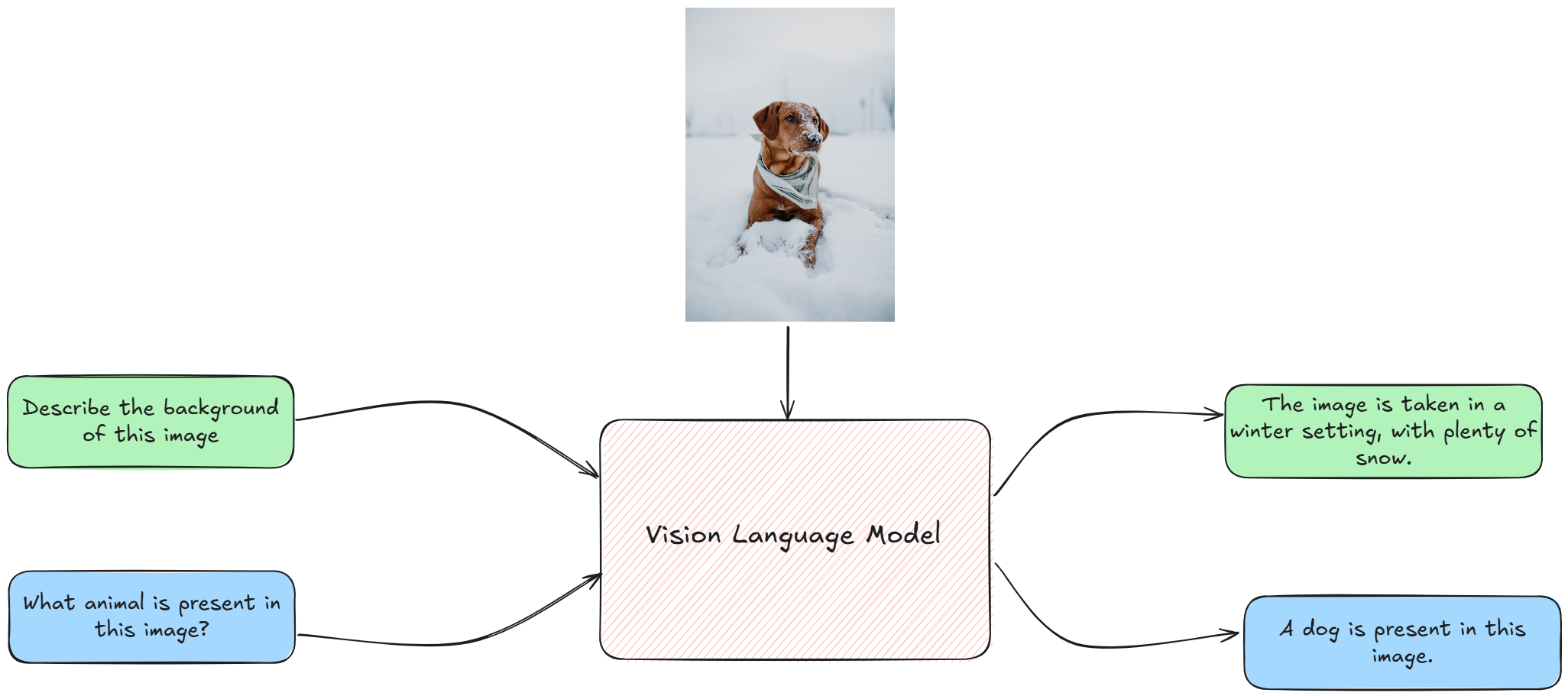

Ciao, Fabio here. Now that you may have upgraded your VLM, it is time to focus a little on the prompts. Let's see why.

Vision Language Models (VLMs) are a significant advancement in processing and understanding multimodal data: they combine text and visual inputs.
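To make "text and visual inputs" concrete, here is a minimal sketch of how a prompt and an image can travel together in a single request. It assumes the OpenAI-style chat message layout that many local runtimes accept; your tool may expect different field names. The function `build_vlm_messages` and its default system prompt are illustrative names, not part of any library.

```python
import base64
import json


def build_vlm_messages(image_bytes, user_prompt,
                       system_prompt="You are a helpful visual assistant.",
                       mime="image/png"):
    """Package a system prompt, a user prompt and one image into chat messages.

    Assumption: the target server understands OpenAI-style content parts
    ("text" and "image_url" with a base64 data URL).
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime};base64,{encoded}"
    return [
        {"role": "system", "content": system_prompt},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": user_prompt},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        },
    ]


# Placeholder bytes stand in for a real image file read with open(path, "rb").
placeholder_image = b"not a real image"
messages = build_vlm_messages(placeholder_image, "Please analyze the following image")
print(json.dumps(messages, indent=2))
```

The text and the image sit side by side in the same user message, which is why the wording of the prompt steers what the model looks for in the picture.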

Unlike Large Language Models (LLMs), which handle only text, VLMs are multimodal, so users (us) can address tasks requiring both visual and textual comprehension. This capability shows up in tasks such as Visual Question Answering (VQA), where models answer questions based on images, and Image Captioning, which involves generating descriptive text for images. Today I will explain how we can prompt VLMs for tasks requiring visual understanding, and the different ways in which we can do so.

This article explores four prompting patterns for Vision Language Models (VLMs), illustrating how the structure of the prompt significantly influences the model's output. They range from a bare zero-shot request to targeted follow-up questions about details, style, and meaning.

1. Zero-Shot Prompting

In zero-shot prompting, the VLM is given a task description through a system and user prompt along with an image, but with no prior examples of how to complete the task. The model relies solely on its pre-existing knowledge to generate a response. This method is advantageous for its simplicity, as a well-crafted prompt can yield good results without the need for task-specific training data.

PROMPT: Please analyze the following image

2. Follow-up questions

It may look dumb, but visual chat applications can work with only one image at a time (at least the ones we installed on our PC). So a bare "analyze" can be insufficient. A good initial or follow-up prompt can be:

PROMPT: Describe in detail [this part of] the image (or this aspect of the image)

3. Styles and colors

If you are a graphic designer and you want to use an image as a canvas or model to create something new from it, you need to get as much information as possible about the style, colors, palette and composition. So a good prompt (as a follow-up) can be:

PROMPT: Describe the style, composition and main color palette of the image and how it conveys the message

4. Meaning and understanding

If your intent is to use visual models to understand graphs, charts or infographics, after the first description you need a follow-up question about the meaning of the figures, the trend line and so on. So a good prompt (as a follow-up) can be:

PROMPT: What is the meaning of this chart/infographic? How do you explain the [xxx] figures?

It is time for you to put it all into practice. In fact, there is no better way to prompt your VLM than testing it yourself. Some models like specific words, so this is more art than science. This is particularly true for small-parameter models, which are quite sensitive to word order and action words.

Happy testing! Send me a message if you manage to make it work, or if you find any issues. I will be happy to hear from you.

Next week I will tell you more about how to use more powerful tools... always without any subscription costs.

Till then,

Fabio - ThePoorGPUguy |