Software engineering with LLMs in 2025: reality check

How are devs at AI startups and in Big Tech using AI tools, and what do they think of them? A broad overview of the state of play in tooling, with Anthropic, Google, Amazon, and others

Hi – this is Gergely with the monthly, free issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at Big Tech and startups through the lens of engineering managers and senior engineers. If you’ve been forwarded this email, you can subscribe here.

Two weeks ago, I gave a keynote at LDX3 in London, “Software engineering with GenAI.” During the weeks prior, I talked with software engineers at leading AI companies like Anthropic and Cursor, in Big Tech (Google, Amazon), at AI startups, and also with several seasoned software engineers, to get a sense of how teams are using various AI tools, and which trends stand out.

If you have 25 minutes to spare, check out an edited video version, which was just published on my YouTube channel. A big thank you to organizers of the LDX3 conference for the superb video production, and for organizing a standout event – including the live podcast recording (released tomorrow) and a book signing for The Software Engineer’s Guidebook.

This article covers:

Twin extremes. Executives at AI infrastructure companies make bold claims, which developers often find fall spectacularly flat.

AI dev tooling startups. Details from Anthropic, Anysphere, and Codeium, on how their engineers use Claude Code, Cursor, and Windsurf.

Big Tech. How Google and Amazon use the tools, including how the online retail giant is quietly becoming an MCP-first company.

AI startups. Oncall management startup incident.io and a biotech AI startup share how they experiment with AI tools. Some tools stick and others are disappointments.

Seasoned software engineers. Observations from experienced programmers: Armin Ronacher (creator of Flask), Peter Steinberger (founder of PSPDFKit), Birgitta Böckeler (Distinguished Engineer at Thoughtworks), Simon Willison (co-creator of Django), Kent Beck (creator of XP), and Martin Fowler (Chief Scientist at Thoughtworks).

Open questions. Why are founders/CEOs more bullish than devs about AI tools, how widespread is usage among developers, how much time do AI tools really save, and more.

The bottom of this article could be cut off in some email clients. Read the full article uninterrupted, online.

1. Twin extremes

There’s no shortage of predictions that LLMs and AI will change software engineering – or that they already have done. Let’s look at the two extremes.

Bull case: AI execs. Headlines about companies with horses in the AI race:

“Anthropic’s CEO said all code will be AI-generated in a year.” (Inc Magazine, March 2025).

“Microsoft's CEO reveals AI writes up to 30% of its code — some projects may have all code written by AI” (Tom’s Hardware, April 2025)

“Google chief scientist predicts AI could perform at the level of a junior coder within a year” (Business Insider, May 2025)

These are statements of confidence and success, and the last two might have some software engineers looking over their shoulders, worrying about job security. Still, it’s worth remembering who makes such statements: companies with AI products to sell. Of course they pump up AI's capabilities.

Bear case: disappointed devs. Here are two amusing examples of AI tools not exactly living up to the hype. The first is from January, when coding tool Devin introduced a bug that cost a team $733 by generating millions of unnecessary PostHog analytics events.

While responsibility lies with the developer who accepted a commit without closer inspection, if an AI tool’s output is untrustworthy, then that tool is surely nowhere near taking over software engineers’ work.

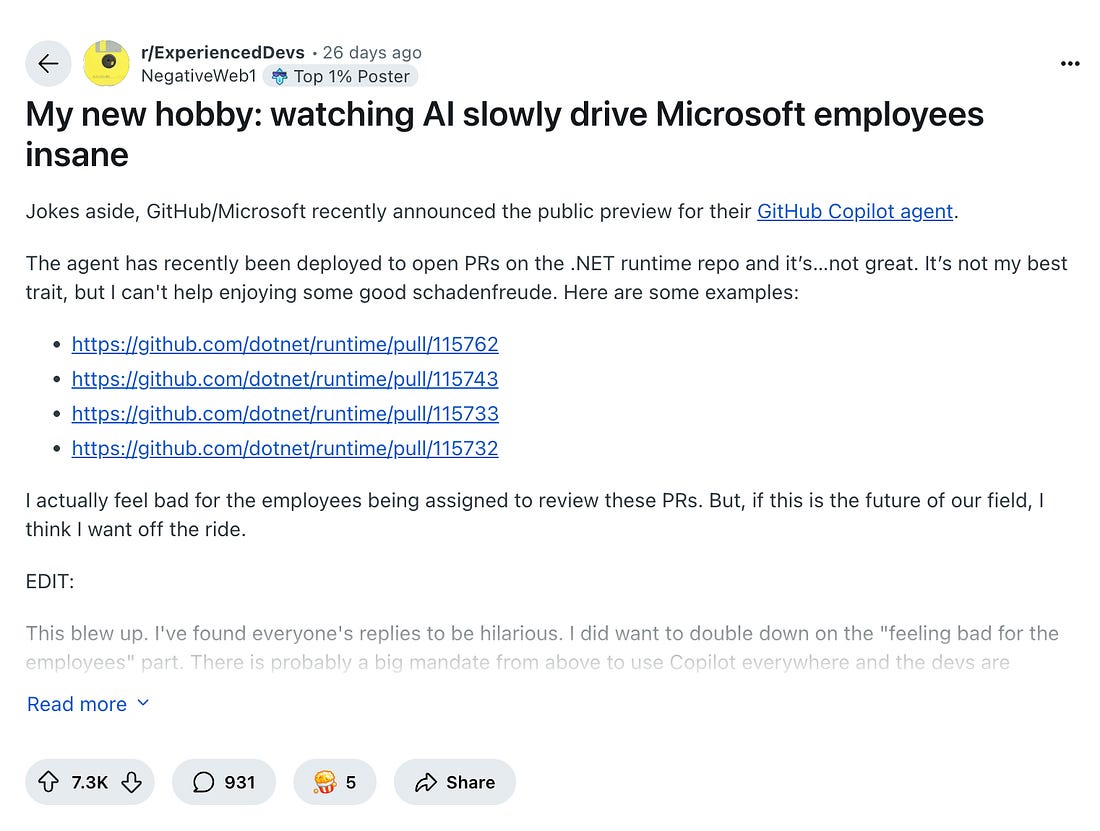

Another case, enjoyed with self-confessed schadenfreude by those not fully on board with tech execs’ talk of hyper-productive AI, was the public preview of GitHub Copilot Agent, when the agent kept stumbling in the .NET codebase.
